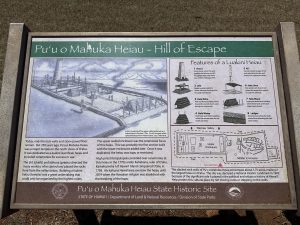

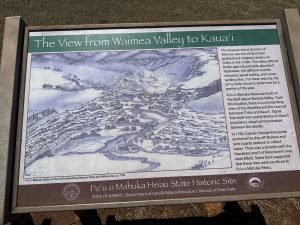

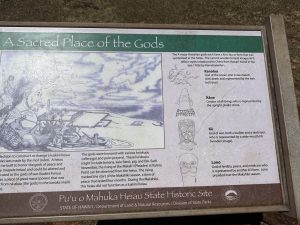

Nestled in the verdant landscapes of Oahu, Puu o Mahuka Heiau, also known as the “Hill of Escape,” stands as a quiet yet powerful testament to Hawaii’s rich cultural and spiritual history. This ancient Hawaiian temple, or heiau, was once a place of refuge and a sacred site for religious ceremonies. As you ascend the hill, you can feel the history underfoot, with every step bringing you closer to a time when the site was a haven for those seeking protection from conflict or spiritual guidance. The view from the top offers not only breathtaking panoramas of the surrounding area but also a profound sense of peace and connection to the land. With its rugged beauty and sacred significance, Puu o Mahuka Heiau offers visitors a deeper understanding of the spiritual traditions that shaped ancient Hawaii. Whether you’re here to explore its history or simply to take in the quiet beauty, the Hill of Escape feels like stepping into another world, one where time slows and the past speaks softly through the wind.

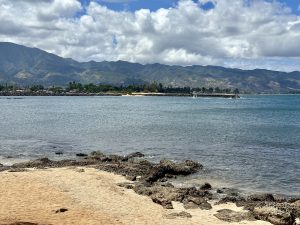

Capturing the Spirit of Haleiwa Harbor: A Glimpse into Hawaii’s Serene Waters

Haleiwa Harbor is more than just a picturesque marina nestled on the North Shore of Oahu; it’s a gateway to the true heart of Hawaiian culture and natural beauty. As your camera lens hovers over the gentle sway of the boats, you’ll feel the rich history of this quiet harbor. From colorful fishing vessels bobbing on the surface to sleek outrigger canoes waiting for the next adventure, every snapshot captures a moment of tranquility that feels timeless. The early morning light paints the harbor in soft hues, perfect for those chasing the perfect photo. Meanwhile, in the distance, the dramatic outline of the Waianae Mountains provides a breathtaking backdrop to the serene waters. Whether you’re an aspiring photographer or simply soaking in the views, Haleiwa Harbor offers the kind of quiet beauty that speaks directly to the soul. The charm of the area is undeniable, a perfect blend of calm ocean life and the untold stories of sailors who’ve passed through its waters.

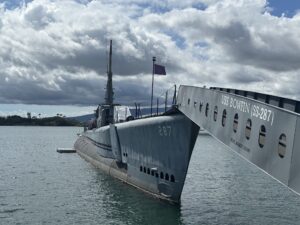

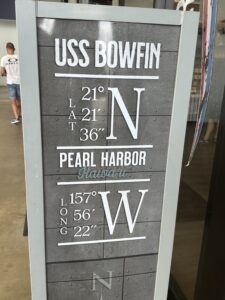

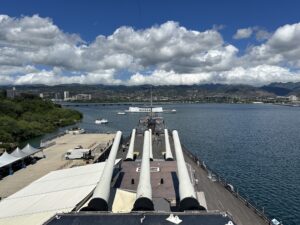

USS Bowfin: The Silent Hunter of Pearl Harbor

The USS Bowfin is more than just a submarine; it’s a powerful symbol of resilience, bravery, and the silent strength that prowled beneath the waves during World War II. Nicknamed the “Pearl Harbor Avenger,” this submarine was launched exactly one year after the attack on Pearl Harbor, on December 7, 1942. With 9 successful war patrols to its name, the Bowfin earned its reputation as a deadly force, sinking dozens of enemy vessels and showcasing the importance of stealth in naval warfare. Today, the USS Bowfin serves as a floating museum in Pearl Harbor, offering visitors a chance to step aboard and experience life in the cramped, intense quarters where brave sailors navigated the dangers of the deep. Walking through its narrow passageways, visitors can feel the weight of history and get a glimpse into the silent warfare that shaped the Pacific theater. For history buffs and adventurers alike, the Bowfin isn’t just a relic; it’s a tribute to the quiet courage of those who served beneath the surface.

Fort DeRussy: Where History Meets Paradise

Fort DeRussy, nestled along the vibrant coastline of Waikiki, is more than just a patch of lush greenery—it’s a site that blends Hawaii’s deep-rooted military past with the present-day allure of paradise. Originally established as a coastal defense fort in 1908, Fort DeRussy played a crucial role in Hawaii’s military history, especially during World War II. Today, this former stronghold has transformed into a serene public park that still holds remnants of its past, including the U.S. Army Museum of Hawaii. Walking through the shaded pathways, you’re reminded of the island’s resilience while the nearby golden sands and turquoise waters provide the perfect backdrop for reflection. Fort DeRussy isn’t just a park; it’s a bridge between Hawaii’s rich history and its modern-day tropical allure. Whether you’re a history buff or someone just seeking a peaceful escape amidst Waikiki’s bustling scene, Fort DeRussy offers the best of both worlds.

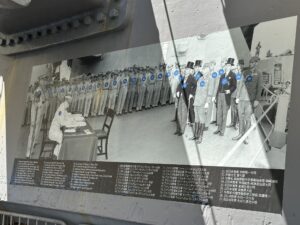

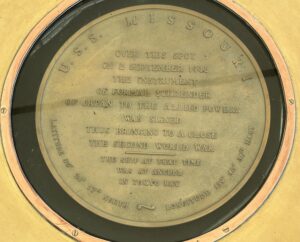

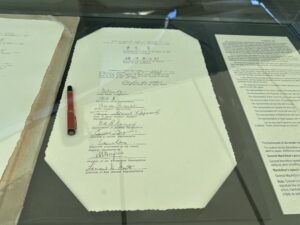

USS Missouri: The Mighty Mo That Ended a World War

The USS Missouri, affectionately known as the “Mighty Mo,” holds a legendary place in American naval history. This massive battleship, launched in 1944, became the setting for one of the most significant moments in modern history—the surrender of Japan, effectively ending World War II. On September 2, 1945, amidst the gray skies and the weight of history, representatives from Japan signed the formal documents of surrender on her deck in Tokyo Bay. As the last battleship commissioned by the United States, Missouri wasn’t just a symbol of power but also of closure and peace.

Beyond her historic role, the USS Missouri continued to serve the U.S. Navy in the Korean War and later in Operation Desert Storm, showcasing her incredible adaptability over decades. Today, she rests peacefully as a museum ship at Pearl Harbor, where visitors from around the world can walk the same deck that once witnessed the end of a global conflict. Standing in her shadow, you can’t help but feel the gravitas of history and the awe-inspiring power that the Mighty Mo once wielded. More than just a battleship, the USS Missouri represents resilience, strength, and the enduring spirit of those who fought for peace.

USS Oklahoma BB-37: A Story of Valor and Tragedy

The USS Oklahoma BB-37 stands as a haunting yet heroic chapter in naval history, one often overshadowed by the USS Arizona but no less significant. On December 7, 1941, when the Japanese attack on Pearl Harbor began, the Oklahoma was hit by several torpedoes, causing the massive battleship to capsize in just 12 minutes. Trapped inside the hull, over 400 sailors lost their lives, many never having a chance to escape. Despite the chaos, there were stories of bravery and sacrifice as sailors fought to save their comrades. In the years that followed, efforts were made to right the ship, but the damage was so severe that it was eventually decommissioned and sold for scrap. Though the Oklahoma is no longer physically present, her legacy lives on through the memories of those who served aboard and the survivors who bore witness to that fateful day. Today, the USS Oklahoma Memorial stands near the USS Missouri at Pearl Harbor, ensuring that this battleship’s story of valor and tragedy will never be forgotten.

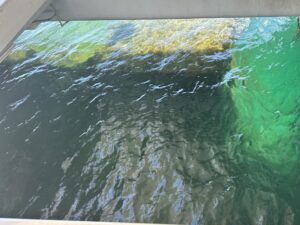

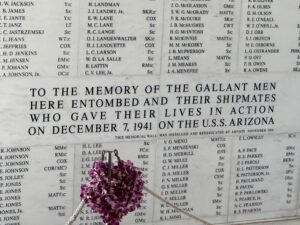

Remembering the USS Arizona Memorial: A Journey Through Time and Honor

The USS Arizona Memorial, standing quietly over the sunken remains of the battleship, offers visitors a profound connection to history and loss. Located at Pearl Harbor, Hawaii, this tribute honors the 1,177 sailors and marines who perished during the December 7, 1941, attack that propelled the United States into World War II. Though decades have passed, the wreckage still weeps oil into the waters, often referred to as the “tears of the Arizona,” reminding us of the lives cut short on that fateful day. Walking through the memorial, the atmosphere feels heavy, not with sorrow alone, but also with a deep respect for the bravery and sacrifice of those who gave everything. For many, visiting the USS Arizona Memorial is not just a lesson in history but a deeply personal journey, sparking reflection on the cost of freedom and the resilience of the human spirit. Whether you’re a history buff or just someone who seeks to pay their respects, this landmark is a place where stories are remembered, and heroes remain in the hearts of those who visit.

Anchored in History: The USS Arizona’s Recovered Anchor and Its Symbolism

The recovered anchor of the USS Arizona is more than a piece of metal; it’s a symbol of strength, sacrifice, and remembrance. After the devastating attack on Pearl Harbor, much of the USS Arizona was left submerged, but one of its massive anchors was salvaged, now standing as a poignant reminder of the battleship’s legacy. This 19,585-pound artifact, which once held the mighty Arizona steady, now anchors the memory of those who served aboard her. Today, the anchor sits at the entrance to the Pearl Harbor Visitor Center, where it invites contemplation and reflection from those who visit. Standing next to it, you’re reminded of the sheer scale of the ship and the enormity of the event that claimed so many lives. It’s not just an anchor—it’s a bridge between the past and the present, a tangible connection to the history that shaped our world. The USS Arizona’s anchor is a solemn yet inspiring tribute to resilience and a lasting marker of America’s strength and perseverance through adversity.

Prince Jonah Kūhiō Kalanianaʻole: A Monument to Hawaii’s Beloved Prince

Standing proudly in Waikiki, the statue of Prince Jonah Kūhiō Kalanianaʻole pays tribute to one of Hawaii’s most cherished leaders. Known as the “People’s Prince,” Kūhiō dedicated his life to the well-being of Native Hawaiians, advocating for their rights both as a prince and as a delegate to the U.S. Congress. His efforts led to the passage of the Hawaiian Homes Commission Act, which secured land for Native Hawaiians and shaped the future of the islands. The statue, located near Kūhiō Beach, captures his regal presence, dressed in traditional Hawaiian attire, gazing out toward the ocean. For locals, it’s a reminder of his lasting legacy and tireless work to uplift his people. Visitors to Waikiki often stop to admire the statue, unaware that they are standing in the presence of a figure who shaped Hawaii’s history. Prince Kūhiō’s spirit of aloha continues to inspire, and his statue serves as a bridge between Hawaii’s past and present, inviting all who pass by to learn about the prince who never stopped fighting for his people.

Makua and Kila: The Beloved Guardians of Waikiki

In the heart of Waikiki, the bronze statue of Makua and Kila captures a timeless moment of Hawaiian storytelling and culture. This charming sculpture depicts Makua, a Hawaiian surfer, and Kila, a Hawaiian monk seal, both gazing out toward the ocean as if ready for another adventure. Situated near the Honolulu Zoo, the statue is not just a beautiful work of art; it’s a symbol of companionship, loyalty, and the deep connection between the people of Hawaii and the sea. For locals and visitors alike, the statue holds a special place, offering a peaceful reminder of the island’s rich history and the legendary tales passed down through generations. Whether you’re stopping by for a photo or simply to admire the craftsmanship, Makua and Kila bring a quiet, comforting presence to Waikiki’s bustling streets. Their story continues to inspire, standing tall as a testament to the enduring spirit of aloha.

Hickam Airfield: Where Planes Pass Over Your Drive

Hickam Airfield, part of Joint Base Pearl Harbor-Hickam on Oahu, offers one of the most unusual sights you’ll ever see: airplanes flying directly over the road. As you drive along the nearby route, you’ll experience the surreal sight of massive aircraft, including military jets, passing just overhead as they take off or land. It’s not your typical drive-by scenery, and the experience can be both thrilling and humbling. This airfield has a rich history, having played a critical role during World War II, and today, it remains an essential part of military operations in the Pacific. But for everyday drivers, it’s that moment when the roar of an aircraft fills the air, and a shadow sweeps across the road, making you feel like you’re part of something bigger. Whether you’re a military history buff or just passing through, the sight of planes cruising over the road at Hickam Airfield is an unforgettable experience that blends Oahu’s natural beauty with its historical and modern significance.

Bellows Beach: Oahu’s Best-Kept Secret for Serenity and Sun

Tucked away on the windward side of Oahu, Bellows Beach is a tranquil gem that feels like your own private paradise. Known for its soft, powdery white sand and crystal-clear waters, Bellows is a haven for those seeking a peaceful retreat away from the busier beaches of Waikiki. The gentle waves make it ideal for swimming, boogie boarding, or just floating lazily in the Pacific. What sets Bellows Beach apart is its dual identity: it sits on a military installation that is reserved for military use on weekdays, but on weekends it opens to the public, offering a rare opportunity for civilians to enjoy its beauty. Surrounded by the majestic Ko’olau Mountains, it’s not just the views that are breathtaking but the sense of calm that washes over you the moment your toes hit the sand. Whether you’re strolling along the shore or soaking in the sun, Bellows Beach feels like the ultimate escape, where time slows down and the beauty of Oahu is yours to savor.

Exploring the Tetsuo Harano Tunnel: A Hidden Gem of Oahu’s H3

Nestled in the lush landscape of Oahu, Hawaii, the Tetsuo Harano Tunnel on the H3 Freeway is more than just a passage through the island; it’s a marvel of engineering set amid natural beauty. Opened in 1997, this roughly mile-long tunnel cuts through the Ko’olau Mountains, offering a unique journey beneath the verdant ridgeline. The Tetsuo Harano Tunnel stands as a symbol of modern infrastructure blending seamlessly with Hawaii’s striking terrain. As you drive through, you’re enveloped in a cool, dimly lit world that contrasts sharply with the vibrant, sun-drenched scenery outside. It’s not just a commute; it’s a brief, fascinating escape into the heart of Oahu’s geological wonders. The tunnel is named in honor of Tetsuo Harano, a visionary civil engineer whose work helped realize this ambitious project. Whether you’re a local or a visitor, the Tetsuo Harano Tunnel provides a memorable and awe-inspiring gateway through one of the island’s most stunning natural backdrops.

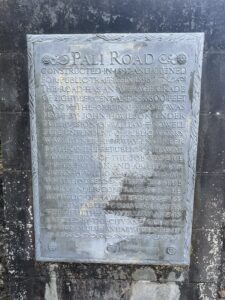

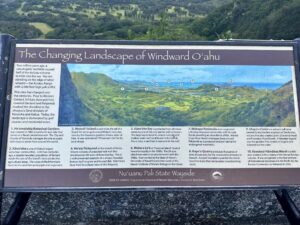

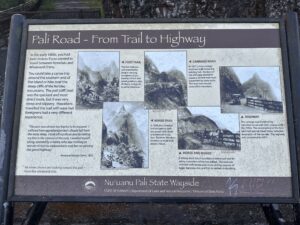

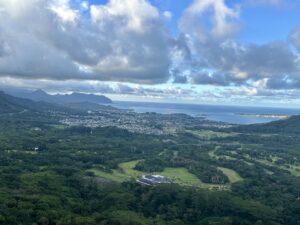

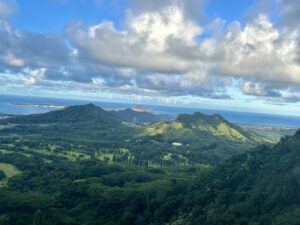

Journey Through History: Nu’uanu Pali State Wayside and the Pali Highway

Nu’uanu Pali State Wayside is more than just a scenic stop on Oahu’s Pali Highway; it’s a place where nature, history, and culture converge. As you drive up the Pali Highway, you’re surrounded by lush greenery and towering cliffs, but nothing compares to the view that greets you at the Nu’uanu Pali lookout. From this windswept vantage point, you can see sweeping vistas of Oahu’s windward coast, with the emerald Ko’olau Mountains on one side and the turquoise waters of Kaneohe Bay on the other. This spot holds deep historical significance, as it was the site of the famous Battle of Nu’uanu, a decisive victory in King Kamehameha I’s campaign to unite the Hawaiian Islands. Standing at the lookout, with the trade winds rushing through, you can almost feel the echoes of the past. Whether you’re exploring the rich history or simply taking in the breathtaking views, a drive along the Pali Highway and a stop at Nu’uanu Pali State Wayside is an unforgettable experience that offers a unique glimpse into the beauty and heritage of Hawaii.

Escape to Serenity at Halona Beach Cove

Tucked away on Oahu’s eastern shore, Halona Beach Cove is a peaceful retreat that feels miles away from the bustling tourist hubs. This secluded spot is a hidden treasure for those in search of solitude and natural beauty. The small cove is framed by dramatic rock formations, creating a serene, sheltered space where the turquoise waters gently lap at the sandy shore. Halona Beach Cove isn’t as well-known as Oahu’s other beaches, which makes it perfect for visitors who want to escape the crowds and enjoy a quiet day by the ocean. Whether you’re up for snorkeling in its calm waters, exploring the tide pools, or simply lounging on the sand with a book, the cove offers a rare slice of tranquility. Watching the sunrise here is an unforgettable experience, with the early morning light casting golden hues across the waves. Halona Beach Cove may be off the beaten path, but that’s exactly what makes it so special.

Pali Highway Lookout: A View You’ll Never Forget

The Pali Highway Lookout, perched high above Oahu’s lush valleys, is one of the island’s most breathtaking spots. Located along the Pali Highway, this historic site offers sweeping views of the windward coast, towering cliffs, and the emerald-green Ko’olau Mountains. But it’s not just the scenery that makes this place special. The Pali Lookout is steeped in history, being the site of the famous Battle of Nu’uanu, a decisive victory in King Kamehameha I’s campaign to unite the Hawaiian Islands. Standing there, you can feel the winds rushing through the mountains, winds so strong they’ll take your breath away. The lookout is easy to access, and it’s a quick stop worth making on any Oahu road trip. Whether you’re snapping photos or simply taking in the view, the Pali Lookout is one of those places that makes you feel truly connected to Hawaii’s natural beauty and history.

Discover the Magic of Chinaman’s Hat: Oahu’s Hidden Gem

Nestled off the northeastern coast of Oahu, Chinaman’s Hat—also known as Mokoli‘i Island—stands as a small yet captivating island rich in Hawaiian legend and natural beauty. Named for its resemblance to the conical hats worn by Chinese laborers, this iconic island is more than just a picturesque landmark. Adventurous visitors can kayak or paddleboard across the calm waters of Kaneohe Bay, while low tide offers a rare opportunity to walk to the island’s shores. Once there, hiking to the top of Mokoli‘i rewards you with stunning panoramic views of the Ko’olau Mountains and the pristine coastline. For those less inclined to climb, the surrounding coral reefs make for excellent snorkeling. Whether you’re chasing an epic sunset or seeking a quiet escape from Oahu’s bustling beaches, Chinaman’s Hat offers a tranquil slice of paradise and a unique way to connect with Hawaii’s natural beauty.

Brewing Aloha: The Maui Brewing Company Experience

Maui Brewing Company isn’t just a place to grab a cold one; it’s where the essence of Hawaii is poured into every glass. Nestled in the heart of the islands, this local gem has grown from a small craft brewery into Hawaii’s largest, but it’s managed to keep that laid-back, island vibe that makes it special. Founded in 2005, Maui Brewing Company is committed to sustainability and community, using solar power and local ingredients to create brews that are as refreshing as a cool ocean breeze. From the iconic Bikini Blonde Lager to the bold Coconut Hiwa Porter, each sip tells a story of the islands, blending tradition with innovation. But what really sets them apart is their dedication to preserving the spirit of Aloha, not just in their beer, but in everything they do. Whether you’re a local or a visitor, a visit to Maui Brewing Company is more than just a stop for a drink; it’s a taste of Hawaii’s soul.

Riding the Waves of Art: The Craft of Eduardo Bolioli Surfboards

Eduardo Bolioli’s surfboards aren’t just vessels for the ocean—they’re canvases that capture the vibrant spirit of surfing and the deep connection between the rider and the sea. Born in Uruguay and shaped by the waves of Hawaii, Bolioli’s journey as an artist and shaper is a testament to his love for both art and surf culture. His boards are hand-painted masterpieces, each one a unique blend of bold colors, intricate designs, and the energy of the ocean. But these surfboards aren’t just about aesthetics; they’re crafted for performance, designed to glide effortlessly across the waves with the precision that only a true surfer and artist could achieve. Owning a Bolioli surfboard is like riding a wave of creativity, where every stroke of paint and curve of the board reflects the passion and soul of a man who lives and breathes the ocean. For those who seek more than just a surfboard, Eduardo Bolioli offers a piece of art that’s as alive as the waves it rides.

Honoring the Memory: Reflecting on 9/11 and Its Legacy

Every year, the memory of September 11, 2001, stirs a collective remembrance that spans across the world. It’s a day forever etched in history—a day of unimaginable loss, but also of extraordinary courage and unity. The tragedy that unfolded that morning left an indelible mark on the hearts of all who witnessed it. Families were torn apart, heroes emerged, and an entire nation stood together in the face of devastation. For many, 9/11 is a reminder of the fragility of life and the strength of the human spirit. It’s not just about remembering what was lost, but also how communities came together in acts of kindness and resilience. As we reflect on this day, we honor the lives lost, the bravery of first responders, and the enduring message that even in the darkest of times, hope and unity can shine through. The legacy of 9/11 continues to shape us, reminding us to cherish every moment, embrace one another, and never forget.

Diamond Head: Oahu’s Crown Jewel of Adventure

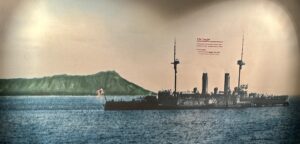

Rising majestically above the southeastern coast of Oahu, Diamond Head is more than just a stunning landmark—it’s a symbol of Hawaii’s natural beauty and adventurous spirit. Known as Lēʻahi in Hawaiian, this iconic volcanic crater has long been a beacon for travelers, offering breathtaking views and a glimpse into the island’s geological past. Hiking to the summit of Diamond Head is a rite of passage for many visitors, and the journey is as rewarding as the destination. The trail, though steep at times, winds through rugged terrain, tunnels, and old military bunkers, giving you a sense of the rich history embedded in these ancient rocks.

As you reach the top, the panoramic view that unfolds is nothing short of spectacular. From Waikiki’s sparkling shoreline to the vast expanse of the Pacific Ocean, the scenery is a vivid reminder of why Oahu is called “The Gathering Place.” But Diamond Head is more than just a hiking destination—it’s a place where nature, history, and culture intersect. The crater was formed over 300,000 years ago during a single volcanic eruption, and it has since played a role in everything from military defense to inspiring local legends.

Whether you’re an avid hiker, a history buff, or simply someone looking to connect with Hawaii’s raw beauty, Diamond Head offers an experience that is both humbling and exhilarating. Standing at the summit, with the island spread out before you, it’s easy to see why this ancient crater continues to captivate the hearts of all who visit.

Outrigger Canoe: The Soul of the Pacific

The outrigger canoe is more than just a vessel; it’s the embodiment of a rich cultural tradition that spans centuries and oceans. With its distinctive design featuring lateral support floats, or outriggers, this canoe has been a lifeline for Polynesians, guiding them across vast stretches of the Pacific long before modern navigation tools existed. These canoes were the very foundation of ancient oceanic exploration, enabling the first settlers to discover and populate the scattered islands of the Pacific, from Hawaii to New Zealand.

But the outrigger canoe is not just a relic of the past—it remains a vibrant part of island life today. In Hawaii, outrigger canoe paddling is more than a sport; it’s a community, a way to connect with the ocean and with each other. From competitive races that test strength and teamwork to leisurely paddles that offer a peaceful escape on the water, the outrigger canoe brings people together and keeps them connected to their roots.

Each stroke in an outrigger canoe is a tribute to the voyagers who navigated the open seas with nothing but the stars and their instincts. It’s a reminder of the resilience, skill, and deep respect for nature that have been passed down through generations. So, whether you’re paddling in a race, gliding along the shore, or simply admiring these beautiful vessels, you’re participating in a tradition that is as timeless as the waves themselves.

Duke Kahanamoku: The Wave That Changed the World

Duke Kahanamoku wasn’t just a man—he was a force of nature, a wave of aloha that carried the spirit of Hawaii across the globe. Known as the “Father of Modern Surfing,” Duke’s legacy is as vast and deep as the ocean he loved. Born in 1890 in Honolulu, Duke grew up with the sea as his playground, mastering the art of surfing long before it became a worldwide phenomenon. But his talents didn’t stop there; he was also an Olympic champion, winning five medals in swimming and shattering records along the way.

What set Duke apart wasn’t just his athletic prowess, but his unwavering aloha spirit. He introduced surfing to new shores, from California to Australia, becoming a global ambassador for the sport. Yet, even as his fame grew, Duke remained deeply connected to his Hawaiian roots. He was a symbol of humility and grace, a true embodiment of the values that define Hawaii. Duke’s influence extends far beyond the surf; he was a pioneer, a peacemaker, and a legend who inspired generations to come.

Today, when you catch a wave in Waikiki or see a statue of Duke, it’s more than just a tribute—it’s a reminder of a man who rode the waves and shared the aloha spirit with the world. Duke Kahanamoku’s legacy is a testament to the power of one person to make a lasting impact, not just on a sport, but on the world.

International Market Place: Where Waikiki’s Heartbeat Comes Alive

Nestled in the vibrant core of Waikiki, the International Market Place is more than just a shopping center—it’s a living, breathing celebration of Hawaiian culture, history, and community. From the moment you step under the ancient banyan tree that stands as the centerpiece, you’re enveloped in the rich heritage of Hawaii. The Market Place, with its open-air design, lush tropical landscaping, and carefully curated mix of high-end boutiques and local artisans, invites visitors to explore the best of both worlds.

What truly sets the International Market Place apart is its seamless blend of old and new. While you can indulge in world-class dining and find luxury brands, you’re also surrounded by nods to the site’s storied past. Once a gathering spot for Hawaiian royalty, this location has been transformed into a modern hub that still honors its roots. The Market Place hosts cultural performances and events that give visitors a taste of the authentic aloha spirit, making it a must-visit destination for anyone who wants to experience the true essence of Waikiki.

Whether you’re hunting for a unique souvenir, dining under the stars, or simply soaking in the atmosphere, the International Market Place offers something for everyone. It’s not just a place to shop—it’s a place to connect with the history and spirit of Hawaii. So next time you’re in Waikiki, take a stroll through this iconic marketplace and let it sweep you away into the heart of paradise.

Lava Flow: A Taste of Paradise in Every Sip

When it comes to tropical cocktails, nothing says “island vibes” quite like a Lava Flow. This vibrant, visually striking drink is a delicious fusion of creamy coconut, ripe pineapple, and fresh strawberries, creating a refreshing treat that feels like a vacation in a glass. The Lava Flow is as much a feast for the eyes as it is for the taste buds, with its layered red and white appearance mimicking the dramatic flow of molten lava against a pristine beach. But don’t let its fiery name fool you—this cocktail is all about cool, laid-back sipping.

The beauty of the Lava Flow lies in its simplicity. The base is a smooth blend of coconut cream and pineapple juice, spiked with a generous splash of rum to give it that classic tropical punch. The “lava” effect is created by blending fresh strawberries with a bit of rum and gently pouring the mixture into the glass, allowing it to swirl and mix with the coconut blend. The result is a stunning, marbled drink that’s perfect for sipping by the pool or imagining you’re somewhere sunny and warm.

Whether you’re hosting a summer party, lounging by the beach, or just want to escape to a tropical paradise from the comfort of your own home, the Lava Flow is the ultimate drink to transport you to a place where the sun always shines. So next time you’re in the mood for something sweet and summery, blend up a Lava Flow and let the island breeze carry you away.

Discovering the Hidden Gems Around Waikiki Beach

Waikiki Beach is often synonymous with sun-soaked shores, vibrant surf culture, and the iconic backdrop of Diamond Head. But just beyond the famous sands, there’s a world of hidden gems waiting to be discovered. From serene parks and historic landmarks to bustling markets and local eateries, the areas surrounding Waikiki offer a rich tapestry of experiences that go beyond the typical tourist trail.

Take a stroll through Kapiʻolani Park, a lush oasis where locals and visitors alike come to relax and enjoy the natural beauty of Oahu. Or explore the historic Moana Surfrider, a grand hotel that has stood the test of time and still exudes the charm of old Hawaii. For a taste of local culture, visit the bustling Waikiki Beach Walk, where you’ll find an array of shops, restaurants, and live entertainment that showcase the island’s unique blend of tradition and modernity.

As you wander through the streets and trails around Waikiki, you’ll uncover the true spirit of Hawaii—a place where the past and present coexist harmoniously, and where every corner has a story to tell. Whether you’re a first-time visitor or a seasoned traveler, these sites around Waikiki Beach will make you fall in love with Oahu all over again.

Navigating Leadership Styles: Finding the Right Fit for Your Team

Leadership is not a one-size-fits-all concept; rather, it’s a dynamic field with various styles, each suited to different situations and teams. Understanding these diverse types of leadership can help you navigate your role more effectively and lead your team to success.

One popular style is transformational leadership, in which the leader inspires and motivates the team by creating a vision for the future and encouraging innovation. Transformational leaders are known for their ability to energize their team, fostering an environment where creativity and enthusiasm flourish. They focus on personal growth and often lead by example, driving change through their commitment and passion.

In contrast, transactional leaders emphasize structure and routine. They focus on setting clear goals, establishing procedures, and rewarding or correcting performance based on results. This style is effective in situations where stability and efficiency are key, and it works well in environments where tasks are well-defined and adherence to processes is crucial.

Servant leadership offers another approach, prioritizing the needs and development of team members above all else. Servant leaders focus on empowering and supporting their team, often taking a more collaborative approach. This style builds trust and fosters a positive work environment, where employees feel valued and motivated to contribute their best.

Autocratic leadership is characterized by a top-down approach, where decisions are made by the leader with little input from team members. This style can be effective in high-pressure situations where quick decisions are needed, but it may also erode team engagement and morale if overused.

On the other end of the spectrum, democratic leadership encourages participation and input from team members in decision-making processes. This approach helps build a sense of ownership and engagement, as team members feel their opinions are valued and considered. It’s especially effective in creative and collaborative environments where diverse perspectives enhance problem-solving.

Finally, laissez-faire leadership adopts a hands-off approach, allowing team members a high degree of autonomy to make their own decisions. This style can work well with highly skilled and motivated teams who require minimal direction. However, it may lead to a lack of coordination or clarity if not managed properly.

Each leadership style has its strengths and is best suited to different contexts and team dynamics. By understanding and adapting these styles, leaders can enhance their effectiveness and drive their teams toward greater success. Embracing the right leadership approach for your situation can make all the difference in achieving your goals and fostering a thriving work environment.

Unlocking Your Potential: The Career-Boosting Power of a Growth Mindset

In today’s fast-paced world, the secret to long-term success often lies not in talent alone, but in the mindset you bring to the table. Embracing a growth mindset—the belief that your abilities can be developed through hard work, learning, and resilience—can be the key to unlocking your full potential and propelling your career forward.

A growth mindset is more than just positive thinking; it’s a fundamental shift in how you view challenges, failures, and opportunities. Rather than treating your skills and intelligence as fixed traits, you learn to see them as malleable, something that can be honed and expanded with effort and perseverance. This perspective transforms obstacles into opportunities for learning and growth, enabling you to push beyond your current limits.

In the workplace, a growth mindset can make all the difference. It fuels your willingness to take on new challenges, even when success isn’t guaranteed. This approach not only makes you more adaptable in the face of change but also opens up new avenues for professional development. Employers value individuals who are eager to learn, take initiative, and bounce back from setbacks with renewed determination. With a growth mindset, you position yourself as a valuable asset—someone who is not just skilled, but constantly evolving and improving.

Moreover, a growth mindset fosters a culture of collaboration and innovation. When you believe that you and your colleagues can grow and improve, you’re more likely to support each other’s efforts, share knowledge, and work together to achieve common goals. This collaborative spirit can lead to breakthroughs that benefit both your personal career and your organization as a whole.

But how do you cultivate a growth mindset? It starts with self-awareness and a willingness to challenge your own assumptions. Treat feedback as a tool for growth rather than as criticism. Set learning goals that push you out of your comfort zone, and celebrate progress as much as outcomes. Surround yourself with people who inspire you to strive for more, and remember that every step forward, no matter how small, is a victory on the path to reaching your full potential.

By adopting a growth mindset, you’re not just enhancing your career prospects—you’re investing in a lifelong journey of personal and professional development. The power to grow, learn, and succeed is within you; all it takes is the belief that you can.

Labor Day in the U.S.: Celebrating the Spirit of Work and Workers

Labor Day in the United States, observed on the first Monday of September, is more than just a long weekend marking the unofficial end of summer. It’s a celebration of the American labor movement and a tribute to the contributions of workers across the nation. This federal holiday has evolved into a day of rest and recognition, highlighting the achievements and rights of those who drive the economy and build communities.

Historically, Labor Day emerged from the labor movement of the late 19th century, a time when workers were fighting for fair wages, better working conditions, and reasonable hours. The holiday was first celebrated in 1882, spearheaded by the Central Labor Union in New York City, and it was officially recognized as a federal holiday in 1894 following a series of labor strikes and unrest. Today, it stands as a reminder of the struggles and successes that have shaped workers’ rights and labor policies.

While the origins of Labor Day are rooted in advocacy and activism, the modern celebration often revolves around leisurely activities and family gatherings. Many people take advantage of the long weekend for barbecues, picnics, and trips to the beach. Parades and community events are common, providing an opportunity for people to come together and enjoy the final days of summer.

Despite the more relaxed atmosphere, Labor Day remains a poignant occasion for reflecting on the progress made in labor rights and acknowledging the ongoing challenges faced by workers. It’s a time to honor the hard work and dedication of those in various fields, from manufacturing to service industries, who contribute to the nation’s prosperity.

In essence, Labor Day serves as both a celebration and a reminder. It’s a chance to relax and enjoy the fruits of hard work while also recognizing the importance of continued efforts to improve working conditions and advocate for workers’ rights. As we enjoy the holiday, it’s worth taking a moment to appreciate the significant role that labor plays in shaping our lives and our society.

A Bridge Across Oceans: The U.S. Embassy in Wellington, New Zealand

Nestled in the heart of New Zealand’s capital, the U.S. Embassy in Wellington serves as a vital link between two nations separated by thousands of miles but united by shared values and interests. This diplomatic mission is more than just a building; it’s a hub of cultural exchange, policy collaboration, and mutual support that strengthens the bond between the United States and New Zealand.

The U.S. Embassy in Wellington plays a multifaceted role in the bilateral relationship between these two countries. It’s where American and Kiwi officials work together on a range of issues, from trade and security to environmental conservation and education.

Beyond its official duties, the embassy is deeply engaged in fostering people-to-people connections. Through various programs and events, it promotes cultural exchange, bringing Americans and New Zealanders closer together. Whether it’s showcasing American films, hosting educational workshops, or supporting community projects, the embassy helps bridge the cultural gap and build lasting friendships across the Pacific.

The embassy also plays a crucial role in supporting U.S. businesses in New Zealand, helping to open doors for American companies and facilitating economic ties that benefit both nations. In an increasingly globalized world, these economic connections are more important than ever, contributing to prosperity on both sides of the ocean.

But the work of the U.S. Embassy in Wellington goes beyond diplomacy and commerce. It’s about building trust, understanding, and cooperation between two countries that, despite their geographical distance, share a commitment to democracy, human rights, and global peace. Through its efforts, the embassy ensures that the partnership between the United States and New Zealand remains strong and dynamic, paving the way for a future of continued collaboration and friendship.

The Spanish Inquisition: A Dark Chapter in History

The Spanish Inquisition stands as one of the most infamous periods in history, a time when fear, persecution, and religious intolerance cast a long shadow over Spain and its territories. Established in 1478 by the Catholic Monarchs, Ferdinand II of Aragon and Isabella I of Castile, the Inquisition was originally intended to maintain Catholic orthodoxy in their kingdoms. However, it quickly evolved into a tool for controlling and suppressing dissent, leading to centuries of terror that left a lasting scar on the Spanish conscience.

The primary targets of the Spanish Inquisition were conversos (Jews who had converted to Christianity, often under duress) and Moriscos (Muslims who had likewise converted), as well as heretics, Protestants, and anyone suspected of harboring beliefs contrary to the Catholic Church’s teachings. Accusations of heresy could be based on the flimsiest of evidence, and the accused often faced brutal interrogations, imprisonment, and torture in the quest to extract confessions. The Inquisition’s trials were notoriously secretive, and those found guilty faced severe punishments, ranging from public penance to execution by burning at the stake.

The Inquisition’s reach extended beyond religious matters, influencing every aspect of Spanish society. It was a powerful tool for the monarchy to enforce political control, silence opposition, and promote conformity. Fear of the Inquisition stifled intellectual and cultural expression, leading to a climate of suspicion and repression. The arts, literature, and sciences were all impacted as the Inquisition sought to eliminate any ideas that threatened its authority.

Despite its horrors, the Spanish Inquisition persisted for centuries, finally coming to an end in 1834. By then, it had claimed the lives of thousands and left an indelible mark on Spanish history. Today, the Spanish Inquisition is remembered as a cautionary tale about the dangers of unchecked power and the persecution of those who dare to think differently.

This dark chapter serves as a reminder of the importance of tolerance, freedom of thought, and the protection of human rights. It is a stark example of how fear and fanaticism can lead to devastating consequences, and why it is crucial to safeguard the values of justice and compassion in any society.